Document Haystack: A Vision LLM Benchmark for Multimodal Document Understanding in Long Contexts

2025-08-13

Reference: http://arxiv.org/pdf/2507.15882v2

Authors and Affiliations

- Goeric Huybrechts: Amazon AGI

- Srikanth Ronanki: Amazon AGI

- Sai Muralidhar Jayanthi: Amazon AGI

- Jack Fitzgerald: Amazon AGI

- Srinivasan Veeravanallur: Amazon AGI

Paper Summary

This paper, "Document Haystack: A Long Context Multimodal Image/Document Understanding Vision LLM Benchmark," is a study focusing on a significant challenge in the AI field: evaluating the understanding capabilities of Vision Language Models (VLMs) for long documents. This research proposes a new evaluation standard (benchmark) called "Document Haystack" to address this challenge and verifies its effectiveness.

Research Objective: The main objective of this study is to objectively measure how accurately recent VLMs can find specific information from long and visually complex documents, ranging from tens to hundreds of pages. By doing so, it aims to clarify the capabilities and limitations of existing models and to indicate the direction for future research and development.

Research Background: With the advent of VLMs like GPT-4 and Gemini, AI has become capable of handling complex tasks that combine images and text. In particular, the ability to understand specialized documents such as contracts, financial reports, and medical records holds the potential to dramatically improve business efficiency in many fields. However, many existing evaluation metrics focus on short documents or single tasks, and it was not well understood how accurately VLMs could process the "long and complex documents" they would encounter in the real world.

Highlights of the Proposed Method: The most significant feature of the "Document Haystack" proposed in this study is its application of the "Needle in a Haystack" concept to long, multimodal documents. It tests the information retrieval capabilities of a VLM by embedding specific information (the needle) within a document of up to 200 pages (the haystack) and having the VLM find it. This "needle" comes in two types—one with only text and one combining text and an image—allowing for a multifaceted evaluation of the model's abilities.

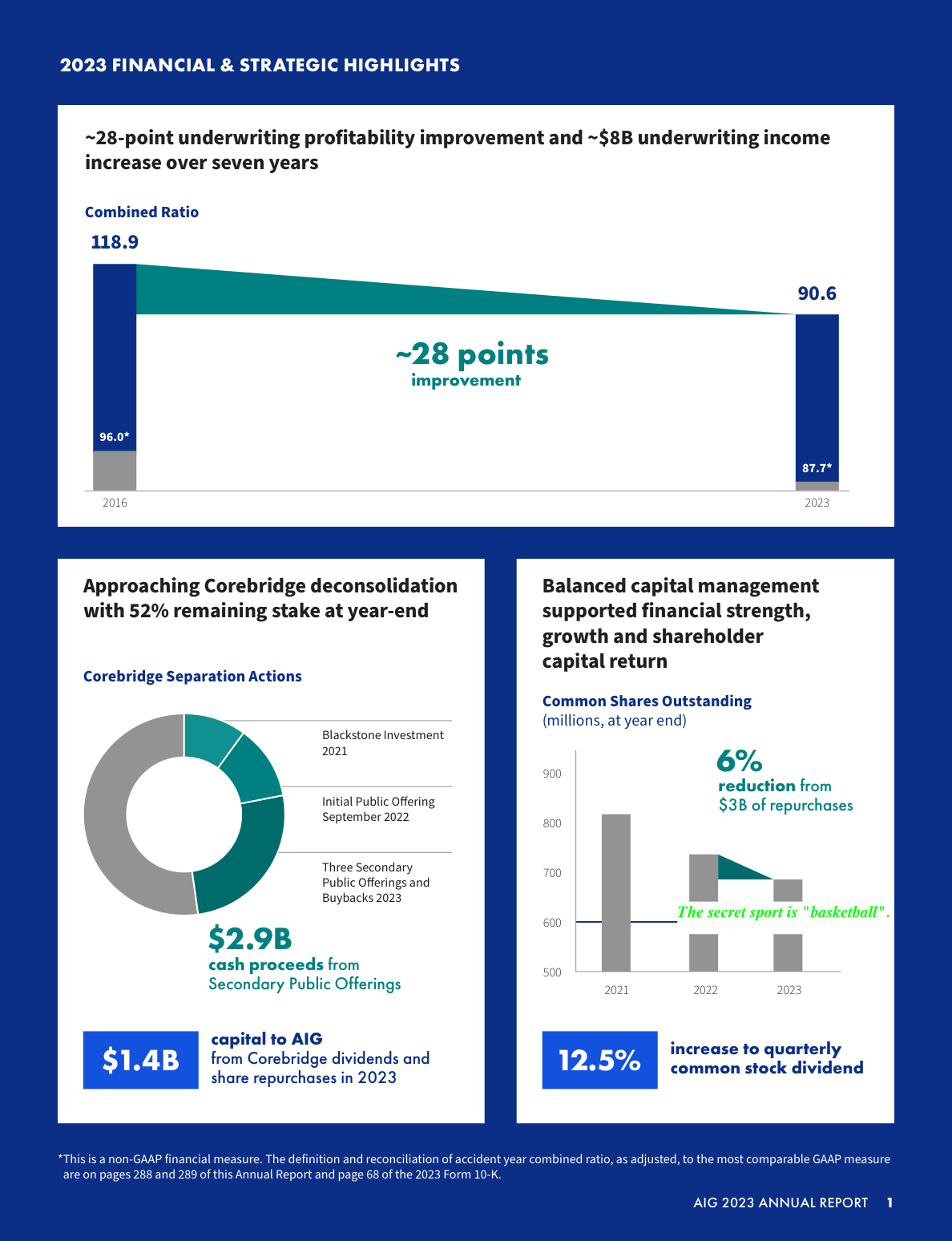

Figure 1: An example of a "text needle." The text "The secret sport is 'Basketball'" is embedded within a document page.

Figure 1: An example of a "text needle." The text "The secret sport is 'Basketball'" is embedded within a document page.

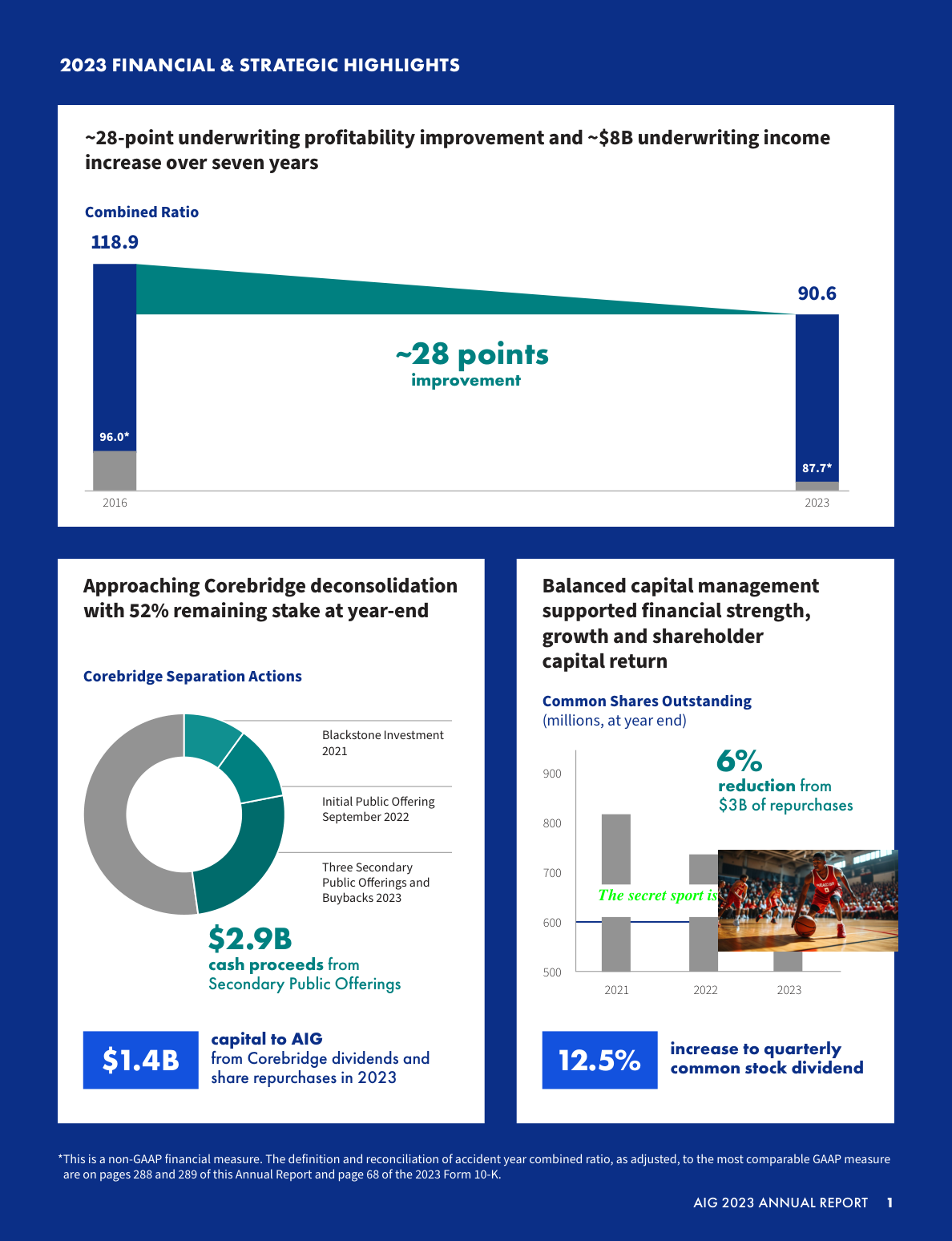

Figure 2: An example of a "text+image needle." Following the text "The secret sport is," the answer, "Basketball," is shown as an image.

Figure 2: An example of a "text+image needle." Following the text "The secret sport is," the answer, "Basketball," is shown as an image.

Related Work

Review of Prior Research

The evaluation of VLMs has been conducted from various perspectives.

- Early language understanding benchmarks (GLUE, SuperGLUE): Primarily evaluated natural language understanding capabilities on short texts.

- Long-context benchmarks (Needle in a Haystack, LongBench): Focused on the ability to find specific information within long texts.

- Multimodal benchmarks (VQA, MileBench): Evaluated question-answering abilities combining images and text.

- Document understanding benchmarks (DUDE, MMLongBench-Doc): Targeted multi-page documents, but many were relatively short (under 50 pages) or used pre-extracted text and image data instead of raw document files (like PDFs).

Differences Between Prior Research and This Study

Existing benchmarks had several gaps:

- Short Document Length: Many were shorter than real-world documents.

- Dependence on Preprocessing: They used pre-extracted text or images rather than the original PDF documents, making it impossible to measure the performance of the model's inherent document processing pipeline.

- Difficulty of Comparison: They were not designed to compare the same task across different document lengths.

"Document Haystack" overcomes these challenges by handling extensive documents up to 200 pages, providing formats close to the original document (PDFs or page-by-page images), and enabling performance comparison while varying the document length.

Novelty and Contributions of the Paper

Novelty

This research offers new perspectives compared to existing studies in the following ways:

- Challenge of Long Multimodal Documents: It is the first comprehensive VLM evaluation benchmark targeting visually complex documents up to 200 pages long.

- Multifaceted Evaluation with Two Types of "Needles": It allows for the separate evaluation of the ability to search for purely textual information (

text needle) and multimodal information combining text and images (text+image needle). - Provision of Diverse Formats: It offers three formats—PDFs that preserve the original document structure, page-by-page images, and extracted text—to accommodate various models and research objectives.

Contributions

This research makes the following academic and practical contributions:

- Provision of an Objective Evaluation Standard: It provides a standard resource that enables quantitative and objective evaluation of VLM's long-context, multimodal document understanding capabilities.

- Clarification of Current VLM Performance Limits: It measured the performance of state-of-the-art VLMs like Nova, Gemini, and GPT, and specifically demonstrated that there is still significant room for improvement, especially in processing imaged documents and multimodal information.

- Guidance for Future Research and Development: This benchmark clarifies the challenges that next-generation VLMs must overcome and serves as an important tool for promoting the development of higher-performance models.

Details of the Proposed Method

Method Overview

This paper proposes a new benchmark, "Document Haystack," to measure the long-document reading comprehension ability of VLMs. This method tests a simple yet fundamental capability: finding a single piece of important information (the needle) from a vast amount of information (the haystack).

Method Composition

The proposed benchmark is constructed through the following steps:

- Document Selection and Processing: 25 publicly available financial 10K reports exceeding 200 pages were selected as base documents. These reports were processed into different lengths—5, 10, 25, 50, 75, 100, 150, and 200 pages—creating a total of 400 document variations.

| # Pages | 5 | 10 | 25 | 50 | 75 | 100 | 150 | 200 | Total |

|---|---|---|---|---|---|---|---|---|---|

| text needles | |||||||||

| # Documents | 25 | 25 | 25 | 25 | 25 | 25 | 25 | 25 | 200 |

| # Questions | 125 | 250 | 625 | 625 | 625 | 625 | 625 | 625 | 4125 |

| text+image needles | |||||||||

| # Documents | 25 | 25 | 25 | 25 | 25 | 25 | 25 | 25 | 200 |

| # Questions | 125 | 250 | 625 | 625 | 625 | 625 | 625 | 625 | 4125 |

| Total | |||||||||

| Total # Documents | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 50 | 400 |

| Total # Questions | 250 | 500 | 1250 | 1250 | 1250 | 1250 | 1250 | 1250 | 8250 |

Table 1: Composition of Document Haystack. The number of documents and questions are defined for each document length and needle type, totaling 400 document variations and 8250 questions.

-

"Needle" Design and Embedding: Two types of "needles" are embedded at various depths (page positions) within the documents.

- Text needles: Textual information in the format "The secret KEY is 'VALUE'" (e.g., "The secret fruit is 'apple'").

- Text+image needles: Information where the KEY part is text, and the VALUE part is an image (e.g., the text "The secret fruit is" next to an image of an apple).

-

Evaluation Method: The VLM is asked, "What is the secret KEY in this document?" The model's response is automatically checked to see if it contains the correct VALUE, and the accuracy is calculated.

Evaluation and Discussion

Evaluation Method

To validate the effectiveness of the proposed benchmark and measure the capabilities of current VLMs, the following evaluations were conducted:

- Target Models: Three major VLMs capable of processing long documents (25 pages or more) at once were evaluated: Nova Lite, Gemini Flash-2.0, and GPT-4o-mini.

- Evaluation Tasks: Performance was compared across the following three scenarios:

- Text Extraction from Images: Converting the document into page-by-page images and finding a "text needle" from them.

- Text Extraction from Parsed Text: Extracting only the text information from the document and finding a "text needle" from it.

- Text + Image Extraction from Images: Converting the document into page-by-page images and finding a "text+image needle" from them.

- Evaluation Metric: Accuracy was used.

Research Results

The study yielded the following important findings:

- Result 1: Performance degrades as documents get longer. A common trend across all models was that as the number of pages in the document increased, the ability to find information accurately decreased. This indicates the difficulty of maintaining long context.

| Model | #Pages 5 | 10 | 25 | 50 | 75 | 100 | 150 | 200 |

|---|---|---|---|---|---|---|---|---|

| Nova Lite | 100.0 | 98.8 | 85.0 | 76.6 | 72.5 | 69.6 | 64.5 | 62.9 |

| Gemini Flash-2.0 | 83.2 | 74.8 | 82.7 | 64.0 | 63.2 | 58.4 | 46.9 | 51.8 |

| GPT-4o-mini | 96.0 | 98.0 | 89.3 | 86.1 | - | - | - | - |

| Table 5: Accuracy (%) for Text Extraction from Images. Accuracy decreases as documents get longer. Nova Lite and GPT-4o-mini show high performance. |

- Result 2: There is a significant performance gap between text processing and image processing. When processing the document as pure text (Table 6), most models maintained a high accuracy of over 90%. However, when processing the same content as images (Table 5), the accuracy dropped by about 30%. This gap suggests challenges in Optical Character Recognition (OCR) from images or the complexity of visual information processing for VLMs.

| Model | #Pages 5 | 10 | 25 | 50 | 75 | 100 | 150 | 200 |

|---|---|---|---|---|---|---|---|---|

| Nova Lite | 100.0 | 100.0 | 98.9 | 95.2 | 94.6 | 93.9 | 94.1 | 89.9 |

| Gemini Flash-2.0 | 99.2 | 99.6 | 99.5 | 97.8 | 96.8 | 97.1 | 91.5 | 91.8 |

| GPT-4o-mini | 100.0 | 100.0 | 97.9 | 98.4 | 96.6 | 97.5 | - | - |

| Table 6: Accuracy (%) for Text Extraction from Parsed Text. All models show very high performance. |

- Result 3: Searching for multimodal information is the most difficult task. In the task of finding a "needle" that combines text and an image (Table 7), performance dropped even more significantly, falling to around 40% for long documents. This indicates that the ability to associate and understand text and visual elements is still developing.

| Model | #Pages 5 | 10 | 25 | 50 | 75 | 100 | 150 | 200 |

|---|---|---|---|---|---|---|---|---|

| Nova Lite | 84.0 | 84.0 | 61.4 | 52.2 | 43.5 | 38.9 | 34.9 | 37.0 |

| Gemini Flash-2.0 | 53.6 | 52.0 | 67.4 | 56.8 | 48.6 | 43.5 | 37.9 | 38.7 |

| GPT-4o-mini | 43.2 | 36.4 | 39.4 | 26.9 | - | - | - | - |

| Table 7: Accuracy (%) for Text + Image Extraction from Images. Performance drops significantly for all models, showing the difficulty of multimodal understanding. |

From these findings, it was confirmed that the proposed method is effective in clearly highlighting the current strengths and weaknesses of VLMs in long-context, multimodal understanding.

Applications and Future Outlook

Potential Applications

The results of this study and the proposed benchmark are expected to have applications in the following areas:

- Application 1 (Document Search and Analysis): Development of advanced search systems that can instantly extract necessary information from lengthy legal documents, financial reports, technical manuals, and medical records.

- Application 2 (Business Process Automation): Services that automate verification tasks for documents containing mixed text and images, such as contract reviews and insurance claim processing.

Business Prospects

The outcomes of this research are expected to be utilized in the following business areas:

- Development of New Products and Services: Development of high-precision AI assistants and analysis tools for industries that handle specialized documents (legal, financial, medical).

- Operational Efficiency: Significant cost reduction and productivity improvement through the automation of business processes that require manual review and organization of large volumes of documents.

- Market Impact: As AI performance in document processing becomes objectively evaluable, it is expected to spur technological competition and lead to the emergence of more reliable services in the market.

Future Challenges

Future research will need to address the important challenge of improving the ability of VLMs to maintain visual information over long contexts. Specifically, this will require the development of more efficient attention mechanisms and architectures that can integrate text and image information at a higher level.

Conclusion

This paper proposed "Document Haystack," a new and comprehensive benchmark for evaluating the long-context, multimodal document understanding capabilities of VLMs. Evaluations using this benchmark have revealed that even today's state-of-the-art VLMs face significant challenges in processing long visual documents. This research is expected to contribute significantly to the advancement of VLM research and to be a crucial step toward realizing more practical document-understanding AI.

Notes

- VLM (Vision Language Model): An AI model that has the ability to understand and process both images (Vision) and language (Language).

- Multimodal: Handling a combination of multiple different types of information (modalities), such as text, images, and audio.

- Needle in a Haystack: An English idiom meaning to find a specific (and hard-to-find) item within a vast amount of data.

Related Posts

2025-08-08

This paper proposes a hybrid Top-k recommendation system that combines traditional recommendation methods with large language models (LLMs). Users are categorized as "active users" and "weak users," with LLMs employed to improve recommendation accuracy and fairness for the latter group. At the same time, the model controls LLM computational costs to ensure practical feasibility.

2025-08-13

This paper presents a large-scale, systematic comparison and analysis of the performance of Transformer models, which have recently gained attention, and conventional mainstream CNN models for object detection tasks using remote sensing data such as satellite imagery. The study evaluates 11 different models on three datasets with distinct characteristics, revealing the potential for Transformers to outperform CNNs and clarifying the trade-off with the associated training costs.

2025-08-13

This study investigates whether deep learning models can predict a patient's self-reported race from skin histology images, examining the potential for demographic biases that AI may unintentionally learn. Through attention analysis, it reveals that the model uses specific tissue structures like the 'epidermis' as cues (shortcuts) to predict race. These findings highlight the importance of data management and bias mitigation for the fair implementation of medical AI in society.